Django 1.7 and Scrapy

Jan 03, 2016 · 3 Min Read

This post is deprecated. Use it at your own risk.

Today I am going to share how to use Scrapy and Django together to crawl a website and store the scraped data in a database through Django models.

Project Setup: Django

First, let us create a Django project using the following commands:

```bash
pip install django==1.7
django-admin.py startproject example_project
cd example_project
```

Inside the example_project directory, we will create a Django app named app:

```bash
python manage.py startapp app
```

Then, we will update app/models.py like this:

```python
from django.db import models


class ExampleDotCom(models.Model):
    title = models.CharField(max_length=255)
    description = models.CharField(max_length=255)

    def __str__(self):
        return self.title
```

Now we shall update the admin.py inside the app directory:

```python
from django.contrib import admin

from app.models import ExampleDotCom

admin.site.register(ExampleDotCom)
```

Add the app to INSTALLED_APPS in example_project/settings.py:

```python
INSTALLED_APPS += ('app',)
```
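The `+=` line is ordinary Python concatenation, so it works whether `INSTALLED_APPS` is a tuple (as generated by the Django 1.7 project template) or a list (the template default from Django 1.9 on). A quick standard-library sketch with stand-in values, not a real settings file:

```python
# Stand-in for the tuple generated by Django 1.7's startproject template.
INSTALLED_APPS = (
    'django.contrib.admin',
    'django.contrib.auth',
)
INSTALLED_APPS += ('app',)  # tuple + tuple builds a new tuple

# Stand-in for the list used by newer project templates.
installed_apps_list = [
    'django.contrib.admin',
    'django.contrib.auth',
]
installed_apps_list += ('app',)  # list += accepts any iterable, including a tuple

print(INSTALLED_APPS)
print(installed_apps_list)
```

The reverse case, adding a list to a tuple with `+=`, raises `TypeError`, so keep the parentheses and the trailing comma in `('app',)`.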

Now, we will run the following commands in the project directory:

```bash
python manage.py makemigrations
python manage.py migrate
python manage.py createsuperuser
```

The last command will prompt you to create a superuser for the application. Now we will run the following command:

```bash
python manage.py runserver
```

It will start the Django development server, by default at http://localhost:8000/.

The Django part is complete for now. Let's start the Scrapy project.

Project Setup: Scrapy

In a separate directory, we will create a Scrapy project using the following commands:

```bash
pip install Scrapy==1.0.3
scrapy startproject example_bot
```

So that the Scrapy project can import the Django application, we shall update settings.py inside the example_bot project directory:

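```python
import os
import sys

# Directory that contains the Django project's manage.py.
DJANGO_PROJECT_PATH = 'YOUR/PATH/TO/DJANGO/PROJECT'
DJANGO_SETTINGS_MODULE = 'example_project.settings'

# Make the Django project importable and tell Django which settings to use.
sys.path.insert(0, DJANGO_PROJECT_PATH)
os.environ['DJANGO_SETTINGS_MODULE'] = DJANGO_SETTINGS_MODULE

# Load Django's app registry before any model is imported.
# django.setup() exists since Django 1.7 and is required from Django 1.9 on.
import django
django.setup()

BOT_NAME = 'example_bot'

SPIDER_MODULES = ['example_bot.spiders']
```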
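Instead of hard-coding `DJANGO_PROJECT_PATH`, the path can be derived from the location of the Scrapy settings file. Below is a minimal sketch, assuming the Scrapy project and `example_project` sit side by side as in the project tree below. `find_django_project`, `configure_django_path` and the `levels_up` parameter are hypothetical helpers, not part of Scrapy or Django; from Django 1.9 on, `django.setup()` still has to be called after them, before any model import (see the comments at the end of this post).

```python
import os
import sys


def find_django_project(settings_file, project_dir_name='example_project', levels_up=3):
    """Hypothetical helper: locate the Django project next to the Scrapy project.

    levels_up=3 goes from .../django1.7+scrapy/example_bot/settings.py
    up to the directory that holds both projects; adjust it to your layout.
    """
    base = os.path.abspath(settings_file)
    for _ in range(levels_up):
        base = os.path.dirname(base)
    return os.path.join(base, project_dir_name)


def configure_django_path(project_path, settings_module='example_project.settings'):
    """Make the Django project importable and point Django at its settings."""
    if project_path not in sys.path:
        sys.path.insert(0, project_path)
    # setdefault keeps a value already exported in the shell, like manage.py does.
    os.environ.setdefault('DJANGO_SETTINGS_MODULE', settings_module)


# In example_bot/settings.py this would be used as:
#   DJANGO_PROJECT_PATH = find_django_project(__file__)
#   configure_django_path(DJANGO_PROJECT_PATH)
```

Scrapy only reads uppercase names from its settings module, so the lowercase helper functions do not leak into the crawler settings.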

To connect Scrapy items to the Django model, we need to install scrapy-djangoitem, which provides DjangoItem:

```bash
pip install scrapy-djangoitem==1.0.0
```

Inside the example_bot directory, we will update items.py like this:

```python
from scrapy_djangoitem import DjangoItem

from app.models import ExampleDotCom


class ExampleDotComItem(DjangoItem):
    django_model = ExampleDotCom
```

Now we will create a spider in a file named example.py inside the spiders directory:

```python
import scrapy

from example_bot.items import ExampleDotComItem


class ExampleSpider(scrapy.Spider):  # BaseSpider is deprecated in Scrapy 1.0
    name = "example"
    allowed_domains = ["example.com"]
    start_urls = ['http://www.example.com/']

    def parse(self, response):
        # First text node of the page's <title>.
        title = response.xpath('//title/text()').extract()[0]
        # First text node of a <p> inside a <div> directly under <body>.
        description = response.xpath('//body/div/p/text()').extract()[0]
        return ExampleDotComItem(title=title, description=description)
```
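The two XPath expressions take the text of the page `<title>` and of the first `<p>` inside a `<div>` under `<body>`; `extract()[0]` raises `IndexError` when nothing matches. The sketch below imitates that selection with the standard library's `xml.etree.ElementTree` on a small made-up XHTML page (not the real example.com markup), and adds a hypothetical `first_or_default` helper that returns a default instead of raising. ElementTree supports only a subset of XPath, so this only illustrates what the expressions select; it is not a replacement for Scrapy selectors.

```python
import xml.etree.ElementTree as ET

# Made-up, well-formed page with the shape the spider expects.
PAGE = """
<html>
  <head><title>Sample page title</title></head>
  <body>
    <div>
      <h1>Heading</h1>
      <p>First paragraph.</p>
      <p>Second paragraph.</p>
    </div>
  </body>
</html>
"""


def first_or_default(values, default=''):
    """Return the first extracted value, or `default` when the list is empty."""
    return values[0] if values else default


root = ET.fromstring(PAGE.strip())

# Rough equivalents of //title/text() and //body/div/p/text():
titles = [el.text for el in root.iter('title')]
paragraphs = [el.text for el in root.findall('./body/div/p')]

title = first_or_default(titles)
description = first_or_default(paragraphs)
missing = first_or_default([el.text for el in root.findall('./body/table')])

print(title)         # Sample page title
print(description)   # First paragraph.
print(repr(missing)) # ''
```

In the spider, the same guard would wrap the lists returned by `response.xpath(...).extract()`.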

Now we shall create a pipeline class like this inside pipelines.py:

```python
class ExPipeline(object):

    def process_item(self, item, spider):
        # DjangoItem.save() builds an ExampleDotCom instance and saves it.
        item.save()
        return item
```
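`CharField(max_length=255)` is not enforced the same way by every database: PostgreSQL, for example, rejects longer values, while SQLite does not enforce the length at all, so a long scraped description can save fine in development and fail later. Here is a sketch of a hypothetical `TruncatingPipeline` that strips and clips both fields before calling `item.save()`. It relies only on dict-style access, which Scrapy items (including `DjangoItem`) support; `MAX_LENGTH` simply mirrors the model and has to be kept in sync by hand. `FakeItem` is a stand-in so the sketch runs without Scrapy or Django.

```python
MAX_LENGTH = 255  # must match max_length on ExampleDotCom's CharFields
TRUNCATED_FIELDS = ('title', 'description')


class TruncatingPipeline(object):
    """Hypothetical pipeline: strip and clip text fields, then save the item."""

    def process_item(self, item, spider):
        for field in TRUNCATED_FIELDS:
            value = item.get(field)
            if value is not None:
                item[field] = value.strip()[:MAX_LENGTH]
        item.save()
        return item


class FakeItem(dict):
    """Stand-in for a DjangoItem: dict access plus a save() method."""

    saved = False

    def save(self):
        self.saved = True
```

To try it in the project, it would replace `'example_bot.pipelines.ExPipeline'` in `ITEM_PIPELINES`.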

Now we need to enable the pipeline in settings.py:

```python
ITEM_PIPELINES = {
    'example_bot.pipelines.ExPipeline': 1000,
}
```
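The integer in `ITEM_PIPELINES` is an ordering key: Scrapy passes each item through the enabled pipelines from the lowest value to the highest, and the values are customarily kept in the 0 to 1000 range. If a cleaning step (here a hypothetical `CleanPipeline`) should run before the saving pipeline, it needs a smaller number. The ordering itself amounts to sorting by value, as this standard-library sketch shows:

```python
ITEM_PIPELINES = {
    'example_bot.pipelines.ExPipeline': 1000,
    # Hypothetical extra pipeline that should run first.
    'example_bot.pipelines.CleanPipeline': 300,
}

# Lower values run earlier, mirroring how Scrapy orders item pipelines.
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(run_order)
```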

The project structure will look like this:

```
├── django1.7+scrapy
│   ├── example_bot
│   │   ├── __init__.py
│   │   ├── items.py
│   │   ├── pipelines.py
│   │   ├── settings.py
│   │   └── spiders
│   │       ├── __init__.py
│   │       └── example.py
│   └── scrapy.cfg
└── example_project
    ├── manage.py
    ├── app
    │   ├── __init__.py
    │   ├── models.py
    │   ├── admin.py
    │   └── views.py
    └── example_project
        ├── __init__.py
        ├── settings.py
        └── urls.py
```

Now we shall run the spider with the following command, from the directory that contains scrapy.cfg:

```bash
scrapy crawl example
```

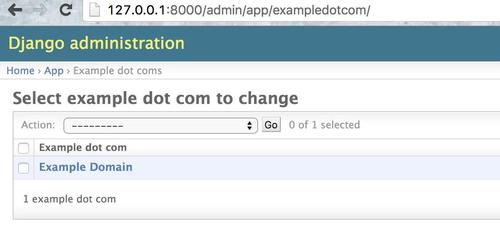

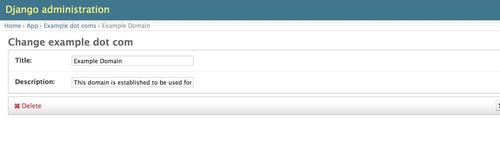

Now let us return to the running Django application. If everything above was done correctly, an ExampleDotCom object should have been created, as shown in the screenshots, at http://localhost:8000/admin/app/exampledotcom/.
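The saved rows can also be checked without the admin. With the default Django 1.7 settings, the data lives in the SQLite file `db.sqlite3` next to `manage.py`, and the model's table is named `app_exampledotcom` (Django's default `<app_label>_<model_name>` naming). A small sketch using Python's `sqlite3` module; `fetch_scraped_rows` is a hypothetical helper, and the database path assumes the default `DATABASES` setting.

```python
import sqlite3

TABLE = 'app_exampledotcom'  # default table name for app.ExampleDotCom


def fetch_scraped_rows(conn):
    """Return (id, title, description) tuples saved by the Scrapy pipeline."""
    cursor = conn.execute(
        'SELECT id, title, description FROM {} ORDER BY id'.format(TABLE)
    )
    return cursor.fetchall()
```

For example, from the directory that contains `example_project/`, `fetch_scraped_rows(sqlite3.connect('example_project/db.sqlite3'))` should list the row created by `scrapy crawl example`.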


That's all: a Django 1.7 + Scrapy project, up and running.

Drawbacks: only implemented with Django 1.7.

Source: https://github.com/ruddra/django1.7-scrapy1.0.3

Got help and clues from this Stack Overflow link.

Last updated: May 04, 2025

I'm using Django 1.9 and I had to add `import django; django.setup()` to Scrapy's settings.py for it to run. Thanks

Where should I put it?

I still get an error, using Django 2.0.

With Django 2.x, the code that worked is:

```python
sys.path.append(os.path.dirname(os.path.abspath('.')))
os.environ['DJANGO_SETTINGS_MODULE'] = 'my_project.settings'
import django
django.setup()
```

There is an error that there is no module named app... Where should I add the app module?